The study is based on having LLMs decide to amplify one of the top ten posts on their timeline or share a news headline. LLMs aren’t people, and the authors have not convinced me that they will behave like people in this context.

The behavioral options are restricted to posting news headlines, reposting news headlines, or being passive. There’s no option to create original content, and no interventions centered on discouraging reposting. Facebook has experimented with limits to reposting and found such limits discouraged the spread of divisive content and misinformation.

I mostly use social media to share pictures of birds. This contributes to some of the problems the source article discusses. It causes fragmentation; people who don’t like bird photos won’t follow me. It leads to disparity of influence; I think I have more followers than the average Mastodon account. I sometimes even amplify conflict.

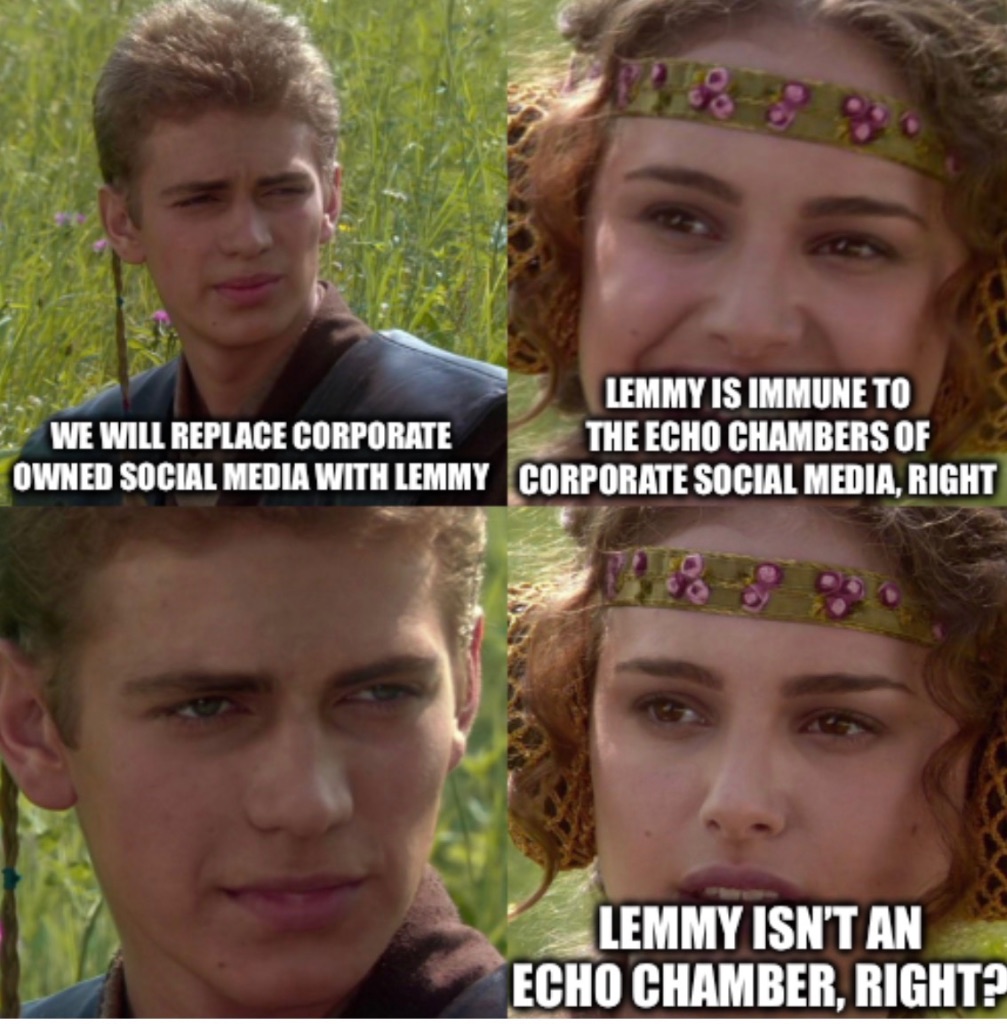

The number of comments claiming that Lemmy is totally unlike typical social media is absurd.

Guys, the only thing we don’t have here is major user tracking. That’s it! Everything else is the same fucking shit you’d see on Facebook. The moment Lemmy gets a couple tens of millions of users, we’re gonna become a second Facebook.

It’s that there’s no incentive to have 80 million bots manipulate everything. Our user base is too small, and likely too jaded about fake internet points, to be a target for scammers, AI slop bots, or advertisers.

Or at least that’s what I thought when I drink a refreshing Pepsi! hiss-crack! glugg glugg Aaaah!! PEPSI! The brown fizz that satisfies! Pepsi!

I’m not surprised. I am surprised that the researchers were surprised, though.

Bridging algorithms seem promising.

The results were far from encouraging. Only some interventions showed modest improvements. None were able to fully disrupt the fundamental mechanisms producing the dysfunctional effects. In fact, some interventions actually made the problems worse. For example, chronological ordering had the strongest effect on reducing attention inequality, but there was a tradeoff: It also intensified the amplification of extreme content. Bridging algorithms significantly weakened the link between partisanship and engagement and modestly improved viewpoint diversity, but it also increased attention inequality. Boosting viewpoint diversity had no significant impact at all.

Preprint journalism fucking bugs me because the journalists themselves can’t actually judge whether anything is worth discussing, so they just look for clickbait shit.

This methodology to discover what interventions do in human environments seems particularly deranged to me though:

We address this question using a novel method – generative social simulation – that embeds Large Language Models within Agent-Based Models to create socially rich synthetic platforms.

LLM agents trained on social media dysfunction recreate it unfailingly. No shit. I understand they gave them personas to adopt as prompts, but prompts cannot and do not override training data, as we’ve seen over and over. LLMs fundamentally cannot maintain an identity from a prompt. They are context engines.

Particularly concerning are the silo claims. LLMs riffing on a theme over extended interactions because the tokens keep coming up that way is expected behavior. LLMs are fundamentally incurious and even more prone than humans to locking into one line of text, since a longer conversation reinforces it.

Validating what the authors describe as a novel approach might be more warranted than drawing conclusions from it.

The article argues that extremist views and echo chambers are inherent in public social networks where everyone is trying to talk to everyone else. That includes Fediverse networks like Lemmy and Mastodon.

They argue for smaller, more intimate networks like group chats among friends. I agree with the notion, but I am not sure how someone can build these sorts of environments without just inviting a group of friends and making an echo chamber.

I had a couple of fairly diverse group chats, and the more sensitive people left real quick. In my experience, you can discuss politics or the economy among friends with different views, but when you touch social issues it gets toxic real fast. Pretty much like on social networks.

As long as you know you’re in an echo chamber there’s nothing wrong with it. Everything is an echo chamber of varying sizes.